BCIs had a big year in the lab. In 2022, they’re coming to the market

Brain-computer interfaces are slowly edging into the mainstream. After a string of scientific breakthroughs, neurotech companies are starting to commercialize their research.

Among them is Blackrock Neurotech, which focuses on restoring functions that have been impaired by disabilities or accidents.

The company is best known for developing a neural implant that enabled a paralyzed man to control a robotic arm with his mind. In 2022, the Utah-based firm plans to launch its first commercial device.

“From an engineering point of view, all the components are there,” Florian Solzbacher, Blackrock’s co-founder and chairman, tells TNW.

“What we’re going to be launching initially is based on what a few dozen patients have used in the context of clinical studies — just a little smaller and more elegant. Shortly thereafter, we’ll have a fully implantable wireless version as well with even more capability.”

Another company that plans to implant brain chips in humans in 2022 is Elon Musk’s Neuralink.

The startup’s flashy demos and Musk’s bombastic predictions have attracted hyperbolic headlines, but many neuroscientists are skeptical about the work.

They point to the company’s dearth of peer-reviewed papers, lack of innovation, and Musk’s history of overpromising.

Some fear that the tycoon’s brash proclamations about merging humans with AI and giving everyone a brain implant will inhibit progress in the field.

“There’s a concern with Neuralink saying it’s going to become a consumer product,” says Solzbacher. “Hypothetically, that could eventually happen, and as a society we need to address that, but maybe society doesn’t want that? We need to have these discussions.

“Quite frankly, however, this scenario is not even realistic right now. And so those in positions of influence need to be careful not to create unnecessary fear.”

Musk’s involvement can nonetheless attract interest — and investment — in BCIs.

The sector is also benefiting from a range of technological advances, regulatory progress, a growing focus on neurological disorders, and a deepening pool of expertise in the field.

Solzbacher emphasizes the importance of moving step by step. Blackrock will initially focus on people with the most pressing needs, such as those living with ALS or severe tetraplegia, before potentially moving on to depression and other conditions where pharmaceuticals are not working effectively.

While Blackrock and Neuralink are building implants for neurological disorders, other companies are rolling out non-invasive BCIs for mass-market consumers.

Neurable, for instance, recently unveiled a pair of headphones that use brainwave sensors to measure focus levels throughout the day. The startup’s co-founder, Ramses Alcaide, told TNW that the idea is to provide an everyday BCI device.

Analysts expect such non-invasive systems, which record brain activity via electrodes on the scalp, to dominate the commercial BCI market in the short term. In time, however, implants for able-bodied people may become a reality.

The possibilities are wide-ranging. In the distant future, BCIs could create transformative gaming experiences, improve the performance of first responders, or turbocharge surveillance.

These applications may not arrive soon, but Solzbacher says it’s vital for tech providers to address the issues now.

While there are understandable concerns about BCIs, it would be a great shame if they constrained the immense potential benefits.

Scientists are using AI to predict which lung cancer patients will relapse

A new AI tool could predict which lung cancer patients will suffer a relapse by analyzing genetic data and pathology images.

Pathologists trained the tool to differentiate between immune cells and cancer cells in tumors. This revealed that some parts of a tumor were packed with immune cells, which the researchers describe as “hot” regions, while others, which they call “cold” regions, appeared completely devoid of them.

The research team, led by Dr Yinyin Yuan of London’s Institute of Cancer Research, found that patients with a lot of these “cold” regions were more likely to relapse.

After investigating the genetic make-up of the patients, they discovered that cancer cells in cold regions may have evolved more recently than those found in hot regions. They suspect this is because the tumor develops a “cloaking” mechanism to hide from the body’s natural defenses.

Their tool was able to count how many regions within a tumor show this cloaking mechanism.

Dr Yuan said in a statement:

The researchers envision doctors using the tool to predict which patients will suffer a relapse and tailor treatments to their individual needs.

Ultimately, it could improve survival rates for the disease, which currently kills over 35,000 people in the UK every year, making it the most common cause of cancer death in the country.

Google’s teaching AI to ‘see’ and ‘hear’ at the same time — here’s why that matters

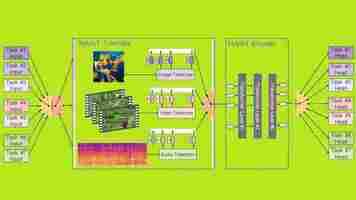

A team of scientists from Google Research, the Alan Turing Institute, and Cambridge University recently unveiled a new state-of-the-art (SOTA) multimodal transformer for AI.

In other words, they’re teaching AI how to ‘hear’ and ‘see’ at the same time.

Up front: You’ve probably heard about transformer AI systems such as GPT-3. At their core, most of them are built to process data from a single kind of input, such as text, images, or audio.

Under the current SOTA paradigm, if you wanted to parse the data from a video, you’d need several AI models running concurrently.

You’d need a model that’s been trained on videos and another that’s been trained on audio clips. This is because, much like your eyes and ears are entirely different (yet connected) systems, the algorithms needed to process audio are typically different from those used to process video.

Per the team’s paper:

Background: What’s remarkable here is not only that the team built a multimodal system capable of handling its related tasks simultaneously, but that in doing so it managed to outperform current SOTA models that focus on a single task.

The researchers dub their system “PolyViT.” And, according to them, it currently has virtually no competition.

Quick take: This could be a huge deal for the business world. One of the biggest problems facing companies hoping to implement AI stacks is compatibility. There are hundreds of machine learning solutions out there, and there’s no guarantee they’ll work together.

This leaves IT leaders either locked into a single vendor for compatibility’s sake or stuck with a mix-and-match approach that usually causes more headaches than it’s worth.

A paradigm where multimodal systems become the norm would be a godsend for weary administrators.

Of course, this is early research from a preprint paper, so there’s no reason to believe we’ll see this implemented widely any time soon.

But it is a great step towards a one-size-fits-all classification system, and that’s pretty exciting stuff.

H/t: Synced