Mailchimp wants to optimize your email campaigns using AI — here’s how

Earlier this month, Mailchimp released Content Optimizer, a new product that uses artificial intelligence to help improve the performance of email marketing campaigns.

Thanks to its vast trove of data, Mailchimp is in a unique position to discover common patterns of successful marketing campaigns. Content Optimizer taps into that data and uses machine learning models and business rules to predict the quality of email campaigns and provide suggestions on how to improve content, layout, and imagery.

This is not Mailchimp’s first foray into using AI for content marketing, but it might be its most impactful effort in the field. Leading the effort to develop Content Optimizer is John Wolf, Product Manager of Smart Content at Mailchimp. Wolf was the founder of Inspector 6, a startup acquired by Mailchimp in 2020. The technology and experience that Inspector 6 brought to Mailchimp played an important role in the development of Content Optimizer.

In an interview with TechTalks, Wolf provided some behind-the-scenes details on the vision and development process of Content Optimizer and shared insights on how AI is changing the future of content marketing.

The vision for AI-powered content marketing

Like many products, the idea for Content Optimizer started with someone feeling the pain. Wolf spotted the need for AI-powered content marketing before founding Inspector 6, when he was Chief Marketing Officer at Intradiem, a software development company.

Like all companies, Intradiem needed great marketing content. But the process was difficult, and measuring quality and success was very subjective.

“The creative process was completely dominated by opinions with little data. It was very manual, very labor-intensive, lots of cycles to get the creative right, and I was thinking there just has to be another way,” Wolf said.

At the time, machine learning was starting to find real business applications in many sectors. So, Wolf started to think about using ML to optimize the creative process for content marketing.

“The idea was, what if we could use machine learning to understand marketing content? If a software can understand what story marketing content is telling and how it’s telling it, it can then correlate features with marketing outcomes and start to standardize and add data to much of the creative process and replace those opinions with data,” Wolf said.

In 2017, Wolf founded Inspector 6 with the vision of developing AI-powered content marketing. Inspector 6 became an AI platform that analyzes marketing content to deliver insights and recommendations for improvement.

Meeting data challenges

Like all applied machine learning, marketing content optimization hinges on having large amounts of quality data. Accordingly, Inspector 6’s platform succeeded in some areas but ran into challenges in others.

“Our largest customer was a very big multi-national consumer packaged goods company with 500 brands in 200 countries, and I thought they would have plenty of data to power my predictive models. And I found that it wasn’t necessarily true,” Wolf said. Providing value for small businesses was more difficult since they had even more limited data for training machine learning models.

Before Mailchimp acquired Inspector 6, the two companies entered a partnership. Mailchimp delivers hundreds of billions of emails per year, and through the partnership, the Inspector 6 team got to experience the advantage of training its models on the huge volume of marketing data that Mailchimp has.

“The amount of data and the breadth of data, everything from marketing content that is selling products and services to content marketing and newsletters and everything in-between, some very high-performing and some just as importantly low-performing—having that depth and breadth of data becomes the key component to delivering a solution like this,” Wolf said.

Recommendations also require context. An analyst would need to know the goal, business vertical, audience, and other details of a marketing campaign before giving feedback on its effectiveness. Machine learning models likewise require context. Fortunately for Mailchimp, its large and varied customer base provides ample data to train machine learning models that perform well across different contexts.

“You take Mailchimp’s data with 360 billion emails a year that become our training set for this, but then you’d have to slice it by context to really be able to solve this problem,” Wolf said. “So basically, the 360 billion emails start to become a collection of many individual training sets that are context-specific.”
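To make the idea of "slicing by context" concrete, here is a minimal sketch that groups one large pool of emails into separate, context-specific training sets. The `EmailRecord` fields (vertical, goal, audience, a click outcome) and the choice of grouping key are hypothetical; Mailchimp has not published its schema or how it defines a context.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class EmailRecord(NamedTuple):
    # Hypothetical fields for illustration; the real schema is not public.
    vertical: str   # business vertical, e.g. "retail"
    goal: str       # campaign goal, e.g. "sell_product"
    audience: str   # audience segment
    text: str       # email body
    clicked: bool   # outcome label used for training


Context = Tuple[str, str, str]


def slice_by_context(records: List[EmailRecord]) -> Dict[Context, List[Tuple[str, bool]]]:
    """Split one big dataset into many training sets, one per context."""
    training_sets: Dict[Context, List[Tuple[str, bool]]] = defaultdict(list)
    for r in records:
        key = (r.vertical, r.goal, r.audience)
        training_sets[key].append((r.text, r.clicked))
    return dict(training_sets)


emails = [
    EmailRecord("retail", "sell_product", "new", "Shop the sale", True),
    EmailRecord("retail", "sell_product", "new", "Our news", False),
    EmailRecord("nonprofit", "newsletter", "donors", "Monthly update", True),
]
sets = slice_by_context(emails)
print(len(sets))  # 2 context-specific training sets
```

Each resulting list could then train its own model, which is one way to read Wolf's description of a single corpus becoming "a collection of many individual training sets."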

Transitioning to Mailchimp

The partnership between Mailchimp and Inspector 6 eventually turned into an acquisition proposal, which became a win-win situation for both companies.

Mailchimp’s data and infrastructure gave the Inspector 6 team the opportunity to expand its application to a wider base of customers.

“Having gone through the experience of knowing how much data my predictive models were going to require and how much data Mailchimp had, I thought it could be really interesting to now solve this problem that I’m solving mostly for a handful of large multinationals for millions or tens of millions of small businesses, which is more aligned with my passions,” Wolf said.

On the other hand, Mailchimp got to boost its AI efforts by acquiring a tried-and-tested backend technology stack for predicting marketing campaign outcomes and a team of engineers who were focused on the intersection of marketing, computer science, and data science.

“Mailchimp has experience in all of those areas, but to have a focus on solving this problem at that intersection is what Mailchimp was most interested in acquiring from a talent standpoint,” Wolf said.

Inspector 6 had architected its technology as individual microservices on Amazon Web Services. The system ingests a marketing asset, and the individual microservices independently do their job to analyze it.
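The ingest-then-analyze pattern can be sketched in a few lines. In this hypothetical illustration, each analyzer function stands in for one microservice, and a thread pool fans a single asset out to all of them so they work independently before their results are merged. The analyzer names and the asset format are assumptions; the real services ran as separate deployments on AWS, not as functions in one process.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Asset = Dict[str, object]
Analyzer = Callable[[Asset], Dict[str, int]]


# Each function is a hypothetical stand-in for one independent microservice.
def analyze_length(asset: Asset) -> Dict[str, int]:
    return {"word_count": len(str(asset["text"]).split())}


def analyze_images(asset: Asset) -> Dict[str, int]:
    return {"image_count": len(asset["images"])}


def analyze_links(asset: Asset) -> Dict[str, int]:
    return {"link_count": len(asset["links"])}


ANALYZERS: List[Analyzer] = [analyze_length, analyze_images, analyze_links]


def ingest(asset: Asset) -> Dict[str, int]:
    """Send one marketing asset to every analyzer and merge their reports."""
    report: Dict[str, int] = {}
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(fn, asset) for fn in ANALYZERS]
        for future in futures:  # collected in submission order
            report.update(future.result())
    return report


email = {
    "text": "Spring sale starts today",
    "images": ["hero.png"],
    "links": ["https://example.com"],
}
print(ingest(email))  # {'word_count': 4, 'image_count': 1, 'link_count': 1}
```

Because no analyzer depends on another, new ones can be added to the list without touching the rest, which is the main appeal of the microservice layout described above.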

Mailchimp, on the other hand, uses the Google Cloud Platform, so the services had to be moved from one cloud platform to another. Fortunately, before the acquisition, Mailchimp had undertaken a massive project to port all its data into Google BigQuery, a cloud-based data warehouse that makes it easy to manage large stores of information and use them in data analysis and machine learning pipelines. Mailchimp also uses other GCP products such as Dataflow, a managed service for processing both streaming and batch data.

This made it much easier to integrate Inspector 6’s services into Mailchimp’s cloud infrastructure.

“From a technology standpoint, we went from one collection of microservices to another, and that worked pretty well,” Wolf said.

Inspector 6’s microservices are an enabling technology: they are integrated into the backend of Mailchimp’s system and offered through frontend products. The services initially provided reporting to Mailchimp but gradually evolved into a source of content insights delivered through frontend product groups. And Wolf’s vision of AI-powered optimization of marketing campaigns, which kicked off Inspector 6, eventually became Mailchimp’s Content Optimizer.

Machine learning and business rules

Content Optimizer provides scorecards that reflect the overall content quality of marketing emails and the number of best practices followed in each analysis category, such as skimmability and layout. All users can access the content scorecard, while premium users also get actionable recommendations to improve their content.
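A per-category scorecard like this can be modeled as groups of pass/fail checks. The sketch below uses the two categories named in the article, but every individual check and threshold (a 60-character subject line, 50-word paragraphs, at most three images) is a hypothetical placeholder, not one of Mailchimp's actual rules.

```python
from typing import Callable, Dict, List

Check = Callable[[dict], bool]

# Categories come from the article; the checks and thresholds are hypothetical.
CHECKS: Dict[str, List[Check]] = {
    "skimmability": [
        lambda e: len(e["subject"]) <= 60,                               # short subject line
        lambda e: all(len(p.split()) <= 50 for p in e["paragraphs"]),    # short paragraphs
    ],
    "layout": [
        lambda e: e["has_cta_button"],                                   # visible call to action
        lambda e: len(e["images"]) <= 3,                                 # not image-heavy
    ],
}


def scorecard(email: dict) -> Dict[str, str]:
    """Report how many best practices pass in each category, e.g. '1/2'."""
    card: Dict[str, str] = {}
    for category, checks in CHECKS.items():
        passed = sum(1 for check in checks if check(email))
        card[category] = f"{passed}/{len(checks)}"
    return card


sample = {
    "subject": "Spring sale",
    "paragraphs": ["Everything is 20% off this week."],
    "has_cta_button": False,
    "images": ["a.png"],
}
print(scorecard(sample))  # {'skimmability': '2/2', 'layout': '1/2'}
```

In this framing, the free scorecard only needs the counts, while premium recommendations would map each failed check (here, the missing call-to-action button) to a suggested fix.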

“Our north star in solving this problem is improving campaign performance. If we improve campaign performance across our user base by just 10 percent, that will create 190 million incremental online visits to our customers’ businesses,” Wolf said.

Naturally, machine learning is a key component of Content Optimizer. Behind the scenes, a pipeline of ML models goes to work to parse and analyze different parts of the marketing email and to predict its outcome.

The first batch of models extracts the features of different elements of the content, such as the tone of writing, the messaging, the layout of the marketing content, and the images used to tell the story.

These features become the input of the next series of machine learning models, which try to predict the outcome and quality of the marketing campaign. In some areas, Content Optimizer combines ML predictions with symbolic AI to provide recommendations that are more robust and understandable.
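The two-stage structure (feature extraction first, outcome prediction second) can be sketched as follows. Simple text heuristics stand in for the trained feature-extraction models, and a logistic-regression-style scorer with made-up weights stands in for the prediction models; none of these features, weights, or the choice of logistic regression come from Mailchimp.

```python
import math
from typing import Dict


def extract_features(email: dict) -> Dict[str, float]:
    """Stage 1: turn raw content into numeric features.

    Hypothetical heuristics stand in for trained extraction models
    (tone, messaging, layout, imagery).
    """
    text = email["text"]
    return {
        "word_count": float(len(text.split())),
        "exclamations": float(text.count("!")),
        "image_count": float(len(email["images"])),
    }


# Stage 2: hypothetical weights for a logistic-regression-style predictor.
WEIGHTS = {"word_count": -0.01, "exclamations": -0.3, "image_count": 0.2}
BIAS = 0.5


def predict_quality(features: Dict[str, float]) -> float:
    """Map stage-1 features to a predicted quality score in (0, 1)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


email = {"text": "Our spring collection is here", "images": ["hero.png"]}
score = predict_quality(extract_features(email))
print(round(score, 3))
```

Keeping the stages separate means the feature extractors can be reused across several predictors, for example one per context.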

“Beyond machine learning, sometimes we use a hybrid of machine learning models and business rules to detect things,” Wolf said. “Sometimes we found that business rules are actually easier to maintain, easier to develop, and in some cases more accurate than machine learning.”

For example, a “call to action” is a key component of any marketing asset. Most successful call-to-action sentences start with a verb of a certain form. That business rule performs very well, the Content Optimizer team found. So, in this case, they use ML libraries to detect parts of speech in CTA text and feed the parsed data to a rule-based system that evaluates its quality based on static rules.
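The hybrid approach, where an ML step tags parts of speech and a static rule judges the result, might look like the sketch below. To keep it self-contained, a tiny hypothetical lexicon replaces the ML tagger (a real system could read Penn Treebank tags from a library such as spaCy via `token.tag_`). The article only says the verb must be "of a certain form"; treating that as a base-form verb (`VB`, the imperative "Shop", "Get") is an assumption.

```python
from typing import List, Tuple

# Hypothetical stand-in for an ML part-of-speech tagger. Tags follow
# Penn Treebank conventions: "VB" = base-form verb, "VBG" = gerund.
TOY_LEXICON = {
    "shop": "VB", "get": "VB", "download": "VB", "start": "VB",
    "shopping": "VBG", "getting": "VBG",
    "our": "PRP$", "new": "JJ", "now": "RB", "sale": "NN",
    "guide": "NN", "the": "DT", "free": "JJ", "trial": "NN",
}


def pos_tag(text: str) -> List[Tuple[str, str]]:
    """Tag each whitespace token; unknown words default to noun ("NN")."""
    return [(tok, TOY_LEXICON.get(tok.lower(), "NN")) for tok in text.split()]


def cta_passes_rule(cta_text: str) -> bool:
    """Static rule (assumed form): a CTA should open with a base-form verb."""
    tagged = pos_tag(cta_text)
    return bool(tagged) and tagged[0][1] == "VB"


print(cta_passes_rule("Shop the sale"))   # True
print(cta_passes_rule("Our new guide"))   # False
print(cta_passes_rule("Shopping now"))    # False
```

The split of responsibilities mirrors Wolf's point: the statistical part handles the messy language, and the rule stays short, easy to maintain, and easy to explain to a user.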

Human oversight

While the machine learning models provide valuable insights, they can’t work autonomously yet. For the moment, Mailchimp uses human operators to make sure the output provided by Content Optimizer makes sense and would be in line with recommendations a creative director would make.

“We go through a traditional predictive modeling exercise, but then there’s a manual vetting process,” Wolf said. “That creates inefficiency in the process, but we feel it’s necessary at this point.”
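One simple way to implement such a manual vetting step is to hold every model-generated recommendation in a pending state until a reviewer approves it. The sketch below is hypothetical: the `VettingQueue` class, its statuses, and the example recommendations are illustrative and do not describe Mailchimp's internal tooling.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Recommendation:
    campaign_id: str
    text: str
    status: Status = Status.PENDING


class VettingQueue:
    """Hypothetical gate: model output waits here until a human signs off."""

    def __init__(self) -> None:
        self._items: List[Recommendation] = []

    def submit(self, rec: Recommendation) -> None:
        self._items.append(rec)

    def pending(self) -> List[Recommendation]:
        return [r for r in self._items if r.status is Status.PENDING]

    def review(self, rec: Recommendation, approve: bool) -> None:
        rec.status = Status.APPROVED if approve else Status.REJECTED

    def visible_to_user(self, campaign_id: str) -> List[str]:
        """Only approved recommendations ever reach the user."""
        return [r.text for r in self._items
                if r.campaign_id == campaign_id and r.status is Status.APPROVED]


queue = VettingQueue()
good = Recommendation("c1", "Shorten the subject line")
odd = Recommendation("c1", "Add 14 more images")  # an out-of-bounds suggestion
queue.submit(good)
queue.submit(odd)
queue.review(good, approve=True)
queue.review(odd, approve=False)
print(queue.visible_to_user("c1"))  # ['Shorten the subject line']
```

The extra step costs reviewer time, which matches Wolf's description of the trade-off, but it guarantees that an out-of-bounds suggestion never reaches a customer.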

There is some controversy around putting human operators behind AI systems, a practice sometimes called the “Wizard of Oz technique” or pseudo-AI. But in our conversation, Wolf was very transparent about it, and he believes human review will be an important factor in the product’s success. Moreover, the company is not outsourcing the task; it is carried out entirely by internal staff.

“In the early days of applied AI, I think the risk of losing credibility with our users by making a recommendation that is just out of bounds and doesn’t make sense is too great that we want to be incredibly careful and sensitive,” Wolf said.

As Content Optimizer gathers more data and feedback, the team will gradually fine-tune the machine learning models and work out how to make them less reliant on human assistance.

“It takes time. It adds a labor-intensive element. But it’s an area we’re willing to put people against,” Wolf said.

It’s not guaranteed that the task will ever be fully automated. But at the end of the day, a machine learning product is, like all products, a tool to solve problems with better outcomes, at greater speed, and at lower cost. If Content Optimizer helps Mailchimp improve its clients’ campaign performance in a statistically significant and cost-efficient way, then it is a successful product regardless of how much human effort it requires. A notable example in this regard is AdWords (now Google Ads), Google’s online advertising platform and its greatest source of revenue. The platform uses a combination of AI and human evaluation to make sure ads are relevant and compliant with the company’s policies.

Learning from users

One of the key parts of the product management process is learning from users. After launching a product, your hypotheses will be put to the test. You’ll usually find pain points that you had overestimated or overlooked, and interesting use cases that you had not thought about.

For example, Content Optimizer showed that, in general, Mailchimp users were better at typography than the product team had initially estimated. The team also found that many marketers struggle to write simple, concise language.

“It’s almost like the collective system is the creative director for 14 million active users and you need to be the creative director for them,” Wolf said. “Sometimes they’ll surprise you with what they’re great at and what they’re still struggling with.”

One of the positive outcomes of the Content Optimizer, according to Wolf, is that marketers have already become comfortable with using the product.

“When you put a new product on the market, you expect a lot of questions about ‘What is this?’ and ‘How is it done?’” he said. But when people use Content Optimizer, their conversations are more about marketing and less about the product, which Wolf said has been a nice surprise.

“If they go straight to the marketing conversation and what they’re going to do differently in the future, that’s the exact goal. They’ve taken the product and understood it really well,” Wolf said.

The future of AI-powered content marketing

According to Wolf, his team will keep on expanding Content Optimizer to provide a wider variety of recommendations in tone, messaging, brand consistency, imagery, and other areas. The product will also expand from email marketing to other channels such as web pages and social media. The touchpoints will also increase in the future. For the moment, Content Optimizer is a reporting tool, but the team plans to also make it available as a real-time recommendation system that operates while users are editing their content.

Wolf is also interested in getting into computer-generated content in the future.

“Even the most sophisticated marketers in the world would love to spend less time generating content,” he said. “Everyone is familiar with cutting-edge copywriting generative models like GPT-3. Those are great. But how do you make sure they’re on-brand, on-message, and they’re optimized in the same way that we’re optimizing human-generated content?”

Generative models struggle with consistency and coherence when used in isolation. But Wolf believes that the combination of the Content Optimizer pipeline and generative models like GPT-3 can create immense value for marketers.

“Our customers spend 28 million hours a year writing copy alone, not even designing and sourcing images. We think with some technologies in the generative space, we can decrease that by 80 percent. That’s 22 million hours we can save our customers,” he said. “That to me is just staggering and it’s one of the things I found most compelling about selling my business to Mailchimp, just to be able to create value at that scale. We’re really excited about what the future holds and we’re really just getting going.”

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech, and what we need to look out for. You can read the original article here.

Algorithm estimates COVID-19 infections in the US are three times higher than reported

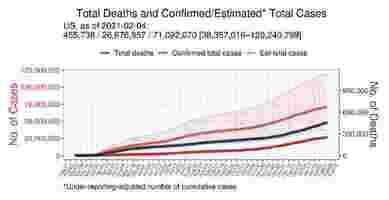

An algorithm developed at the UT Southwestern Medical Center has estimated that there are almost three times as many COVID-19 infections in the US as there are confirmed cases.

The model makes daily predictions of both total and current infections across the US and in the 50 countries worst affected by the virus.

It calculated that more than 71 million people in the US had contracted COVID-19 by February 4, when there were only 26.7 million confirmed cases.

The model also estimated that 7 million people in the country currently have infections and are potentially contagious.

In other countries, too, the algorithm calculated far more infections than were reported. In the UK, it estimated nearly 25 million infections rather than the roughly 4 million confirmed cases, while in Mexico it estimated almost 27.6 million instead of 1.9 million.

“The estimates of actual infections reveal for the first time the true severity of COVID-19 across the U.S. and in countries worldwide,” said study author Jungsik Noh in a statement.

The algorithm’s calculations are derived from the number of reported deaths, rather than the amount of lab-confirmed cases.

It then assumes that the infection fatality rate is 0.66%, based on early pandemic data from China.

It also accounts for other factors, such as the average number of days it takes for someone with symptoms to either die or recover.

Finally, it compares its predictions with the number of publicly reported cases to calculate the ratio of confirmed to estimated infections.
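The core idea of working backward from deaths can be illustrated with a deliberately simplified calculation; this is not the model from the PLOS ONE paper, which is more elaborate. If a fraction IFR of infected people die, then cumulative deaths divided by the IFR approximates cumulative infections, shifted back by the typical delay between infection and death. The 0.66% rate and the US figures come from the article; the death series and the two-day lag are hypothetical.

```python
from typing import List

IFR = 0.0066  # infection fatality rate cited in the article (0.66%)


def estimate_cumulative_infections(cumulative_deaths: List[float], lag_days: int) -> List[float]:
    """Back-calculate cumulative infections from cumulative reported deaths.

    Simplifying assumption: deaths reported on day t + lag_days reflect
    infections that had occurred by day t.
    """
    return [cumulative_deaths[t + lag_days] / IFR
            for t in range(len(cumulative_deaths) - lag_days)]


def confirmed_to_estimated_ratio(confirmed: float, estimated: float) -> float:
    """Share of estimated infections that were actually confirmed."""
    return confirmed / estimated


# Hypothetical cumulative death counts for five consecutive days.
deaths = [0, 10, 30, 66, 132]
infections = estimate_cumulative_infections(deaths, lag_days=2)
# infections holds roughly 4,545, 10,000, and 20,000 for days 0 to 2.

# With the article's US figures: 26.7 million confirmed vs. 71 million estimated.
print(round(confirmed_to_estimated_ratio(26.7e6, 71e6), 2))  # 0.38
```

A ratio of about 0.38 is the same finding stated the other way around: roughly 2.7 estimated infections for every confirmed case, or "almost three times" as many.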

Noh compared his early findings with existing prevalence rates found in studies that used blood tests to check for antibodies to the SARS-CoV-2 virus.

He found that the algorithm’s estimates closely corresponded to the percentage of people who tested positive for antibodies.

Noh acknowledges that the estimates are rough, given the uncertainty in factors such as the COVID-19 death rate. Nonetheless, he says they are more accurate than the confirmed case counts used to guide public health policies.

You can read the study paper in the journal PLOS ONE.