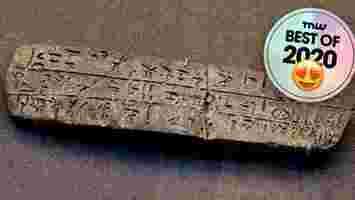

New MIT algorithm automatically deciphers lost languages

A new AI system can automatically decipher a lost language that’s no longer understood — without knowing its relationship to other languages.

Researchers at MIT CSAIL developed the algorithm in response to the rapid disappearance of human languages. Most of the languages that have existed are no longer spoken, and at least half of those remaining are predicted to vanish in the next 100 years.

The new system could help recover them. More importantly, it could preserve our understanding of the cultures and wisdom of their speakers.

The algorithm harnesses key principles from historical linguistics, such as the predictable ways in which sounds change as languages evolve. The researchers give the example of a “p” in a parent language, which might become a “b” in a descendant language but is unlikely to become a “k”: “p” and “b” are both made with the lips, while “k” is made at the back of the mouth.
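To make the “p” versus “k” intuition concrete, here is a minimal Python sketch that scores a sound substitution by which articulatory features change. The tiny feature table, the weights and the name `substitution_cost` are all illustrative assumptions, not the MIT team's actual model; the only idea borrowed from the article is that some sound changes are more plausible than others.

```python
# Illustrative articulatory features: (voicing, place, manner).
# This small table and the weights below are assumptions for the sketch.
FEATURES = {
    "p": ("voiceless", "bilabial", "plosive"),
    "b": ("voiced", "bilabial", "plosive"),
    "t": ("voiceless", "alveolar", "plosive"),
    "d": ("voiced", "alveolar", "plosive"),
    "k": ("voiceless", "velar", "plosive"),
    "g": ("voiced", "velar", "plosive"),
}

# Hypothetical weights: changing where a sound is made (place) is treated
# as less likely than changing whether it is voiced.
WEIGHTS = (1.0, 3.0, 2.0)  # voicing, place, manner

def substitution_cost(a: str, b: str) -> float:
    """Weighted sum of the features on which two sounds differ (0 = identical)."""
    return sum(w for w, x, y in zip(WEIGHTS, FEATURES[a], FEATURES[b]) if x != y)

for target in ("b", "k"):
    print(f"p -> {target}: {substitution_cost('p', target)}")
# prints:
# p -> b: 1.0
# p -> k: 3.0
```

A lower cost marks a more plausible historical change, so a model built on costs like these would prefer matching an ancient “p” to a “b” over a “k”.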

These kinds of patterns are then turned into computational constraints. This allows the model to segment words from an ancient language and map them to their counterparts in a related language.
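Segmenting and mapping can be illustrated with a toy dynamic program: split an unspaced “inscription” into chunks so that each chunk is as close as possible to some word in a known language's vocabulary. This Python sketch is not the MIT model. It uses plain edit distance where a real decipherment system would use phonetically informed costs, and the inscription and lexicon below are invented for illustration.

```python
def edit_distance(a: str, b: str) -> int:
    """Classic Levenshtein distance (unit cost for insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]

def segment(text: str, lexicon: list[str], max_len: int = 8):
    """Split unspaced text into chunks, mapping each chunk to its closest
    lexicon word so that the total edit distance is as small as possible."""
    n = len(text)
    # best[i] = (total cost, [(chunk, matched word), ...]) for text[:i]
    best = [(0, [])] + [(float("inf"), [])] * n
    for end in range(1, n + 1):
        for start in range(max(0, end - max_len), end):
            chunk = text[start:end]
            word = min(lexicon, key=lambda w: edit_distance(chunk, w))
            cost = best[start][0] + edit_distance(chunk, word)
            if cost < best[end][0]:
                best[end] = (cost, best[start][1] + [(chunk, word)])
    return best[n]

# Hypothetical inscription in which an ancient "p" corresponds to a known "b".
cost, mapping = segment("patakelusori", ["bata", "kelu", "sori"])
print(cost, mapping)
# prints: 1 [('pata', 'bata'), ('kelu', 'kelu'), ('sori', 'sori')]
```

The dynamic program considers every possible split, so the cheapest overall segmentation wins even when no single chunk matches perfectly.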

The algorithm can also identify different language families. For example, their method suggested that Iberian is not related to Basque, supporting recent scholarship.

The project was led by MIT Professor Regina Barzilay, who last month won a $1 million award from the world’s largest AI society for her pioneering work on drug development and breast cancer detection.

She now wants to expand the work to identifying the semantic meaning of words — even if we don’t know how to read them.

“For instance, we may identify all the references to people or locations in the document which can then be further investigated in light of the known historical evidence,” Barzilay said in a statement.

“These methods of ‘entity recognition’ are commonly used in various text processing applications today and are highly accurate, but the key research question is whether the task is feasible without any training data in the ancient language.”


Is there a more environmentally friendly way to train AI?

Machine learning is changing the world, and it’s changing it fast. In just the last few years, it’s brought us virtual assistants that understand language, autonomous vehicles, new drug discoveries, AI-based triage for medical scans, handwriting recognition and more.

One thing that machine learning shouldn’t be changing is the climate.

The issue lies in how machine learning models are developed. For a machine learning (or deep learning) model to make accurate decisions and predictions, it first needs to be ‘trained’.

Imagine an online marketplace for selling shoes that has been having a problem with people trying to sell other things on the site: bikes and cats and theater tickets. The marketplace owners decide to limit the site to shoes only by building an AI that recognizes photos of shoes and rejects any listing without shoes in the picture.

The company collects tens of thousands of photos of shoes, and a similar number of photos with no shoes. It hires data scientists to design a complicated mathematical model and convert it into code. Then the team starts training its shoe-detecting machine learning model.

This is the vital part: the model looks at all the pictures of shoes and tries to work out what makes them “shoey”. What do they have that the non-shoe pictures don’t? Without getting too bogged down in technical details, this process takes a lot of computing resources and time. Training accurate machine learning models means running many chips, such as GPUs, at full power 24 hours a day for weeks or months while the models are trained, tweaked and refined.
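Real image models are vastly larger, but the basic training loop looks similar: repeatedly nudge the model's parameters to reduce its errors on labeled examples. Here is a minimal Python sketch of that idea, assuming each photo has already been reduced to two hypothetical numeric features; it trains a logistic-regression “shoe detector” with plain gradient descent. The data and the function names are made up for illustration.

```python
import math
import random

def train_logistic_regression(data, epochs=200, lr=0.1):
    """Fit weights and a bias so that sigmoid(w . x + b) estimates P(shoe | x)."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):  # each extra pass over the data costs more compute
        for x, y in data:
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of "shoe"
            err = p - y                      # gradient of the log loss w.r.t. z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    """Return 1 ("shoe") if the predicted probability exceeds 0.5, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

# Hypothetical data: each photo is reduced to two made-up numeric features,
# with shoes (label 1) clustered around (2, 2) and non-shoes around (-2, -2).
random.seed(0)
data = [([random.gauss(2, 1), random.gauss(2, 1)], 1) for _ in range(100)]
data += [([random.gauss(-2, 1), random.gauss(-2, 1)], 0) for _ in range(100)]
random.shuffle(data)

w, b = train_logistic_regression(data)
accuracy = sum(predict(w, b, x) == y for x, y in data) / len(data)
print(f"training accuracy: {accuracy:.2f}")
```

This toy runs in well under a second; the cost explodes when the same loop runs over millions of images with models holding billions of parameters.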

As well as time and expense, AI training uses a lot of energy. Modern computer chips use only minimal power when they are idle, but when they’re working at full capacity they can burn through electricity, generating masses of waste heat (which also needs to be pumped out using cooling systems that use, yup, more energy).
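A rough, back-of-the-envelope way to turn that into numbers is chip power multiplied by running time, scaled up for data center overhead such as cooling. The sketch below assumes a power usage effectiveness (PUE, total facility energy divided by computing energy) of 1.5; that value, the GPU count and the 300 W draw are hypothetical placeholders, and the estimate ignores CPUs, memory and networking.

```python
def training_energy_kwh(num_gpus: int, gpu_power_watts: float,
                        hours: float, pue: float = 1.5) -> float:
    """Estimate the electricity drawn by a training run, in kWh.

    pue scales the chips' energy up to cover facility overhead such as
    cooling; 1.5 is an assumed placeholder value.
    """
    chip_kwh = num_gpus * gpu_power_watts * hours / 1000  # Wh -> kWh
    return chip_kwh * pue

# Hypothetical run: 8 GPUs drawing 300 W each, flat out for four weeks.
energy = training_energy_kwh(num_gpus=8, gpu_power_watts=300, hours=4 * 7 * 24)
print(f"{energy:,.0f} kWh")
# prints: 2,419 kWh
```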

Any major energy use has implications for climate change, as most of our electricity is still generated from fossil fuels, which produce carbon dioxide as they burn. One recent study from the University of Massachusetts estimated that training a single advanced language-processing AI, including an automated search for its best design, produced 626,000 lb (about 284 metric tons) of CO2, roughly as much as five cars would produce over their lifetimes!

To help, a team from Canada’s Montreal Institute for Learning Algorithms (MILA) released a machine learning emissions calculator in December of last year so that AI researchers can estimate how much carbon their model training produces.
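The MILA calculator's internals aren't described here, but estimates of this kind rest on a simple multiplication: electricity used times the carbon intensity of the grid that supplied it. This Python sketch shows only that arithmetic; the two intensity figures are hypothetical round numbers, since real values vary by region and over time.

```python
def training_emissions_kg(energy_kwh: float, grid_kg_co2_per_kwh: float) -> float:
    """CO2 emitted = electricity used x carbon intensity of the supplying grid."""
    return energy_kwh * grid_kg_co2_per_kwh

# Hypothetical carbon intensities in kg CO2 per kWh.
GRIDS = {"coal-heavy grid": 0.8, "mostly renewable grid": 0.05}

for name, intensity in GRIDS.items():
    kg = training_emissions_kg(2400, intensity)  # same 2,400 kWh training run
    print(f"{name}: {kg:,.0f} kg CO2")
# prints:
# coal-heavy grid: 1,920 kg CO2
# mostly renewable grid: 120 kg CO2
```

The same run can differ in emissions by more than an order of magnitude depending only on where it is trained, which is why location matters as much as efficiency.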

This problem is getting worse as data scientists and engineers tackle more complicated AI problems by throwing more power at them, using bigger and more expensive computing resources rather than focusing on efficiency.

GPT-3, the AI-powered language model recently released by OpenAI, drew on 45 terabytes of text data (the entire English Wikipedia, some 6 million articles, makes up only 0.6 percent of its training data), and the environmental cost of this hyper-powerful machine-learning technology is still unknown.

To be fair, other computing processes are also on a worrying trajectory. A study by ICT specialist Anders Andrae projected that by 2030, the ICT industry, which delivers Internet, video, voice and other cloud services, would be responsible for 8% of total worldwide electricity demand in his most optimistic scenario. His realistic projection put the figure at 21%, with data centers using more than a third of that (over 7% of global demand).

One of the key recommendations of the University of Massachusetts research for reducing the waste caused by AI training was “a concerted effort by industry and academia to promote research of more computationally efficient algorithms, as well as hardware that requires less energy”.

Software can also be used to increase hardware efficiency, thereby reducing the computational power AI models need, but perhaps the biggest impact will come from running the data centers themselves on renewable energy. Facebook’s data center in Odense, Denmark, is said to run entirely on renewable energy sources. Google also operates energy-efficient data centers, such as its facility in Hamina, Finland.

In the very long term, as the world’s industrial economies move away from fossil fuels, perhaps the link between computational load and CO2 production will be broken and perhaps all machine learning will be carbon-neutral. Even longer-term, deep learning on weather and climate patterns could help humanity gain a better understanding of how to combat and even reverse climate change.

But until then, responsible businesses should consider the carbon impact of their new technologies, including machine learning, and take steps to measure the carbon cost of their model development and to reduce it by improving efficiency in development, software and hardware.

Troubled facial recognition startup Clearview AI pulls out of Canada — before it was pushed

Dystopian surveillance firm Clearview AI has stopped offering its facial recognition service in Canada, in response to a probe by data protection authorities.

“The investigation of Clearview by privacy protection authorities for Canada, Alberta, British Columbia, and Quebec remains open,” the Office of the Privacy Commissioner of Canada said in a statement.

The probe was opened in February, following reports that Clearview was collecting personal information without consent. The authorities still plan to issue findings from the investigation.

The exit is a big blow to the New York-based startup. Outside of the US, Clearview’s biggest market had been Canada. More than 30 law enforcement agencies in the country had used the software, including the Toronto Police Service and the Royal Canadian Mounted Police (RCMP).

According to Canada’s privacy watchdog, the RCMP had been its last remaining client in the country. The suspension of that contract brings Clearview’s Canadian adventure to an end, at least for now.

Clearview on the way out?

Amid the protests against police brutality and racism triggered by the killing of George Floyd, tech giants have publicly stepped back from facial recognition.

Not good old Clearview AI though. The startup still wants to sell its app to law enforcement agencies — all for the good of humanity, of course.

“While Amazon, Google, and IBM have decided to exit the marketplace, Clearview AI believes in the mission of responsibly used facial recognition to protect children, victims of financial fraud, and other crimes that afflict our communities,” CEO Hoan Ton-That said last month.

Indeed, Big Tech’s departure from the market left a gaping facial recognition hole that Clearview could have filled. But regulators are starting to shove the company out before it can squeeze any further in.

In Canada, it looks like Clearview chose to jump before it was pushed.