Researchers propose ‘ethically correct AI’ for smart guns that locks out mass shooters

A trio of computer scientists from the Rensselaer Polytechnic Institute in New York recently published research detailing a potential AI intervention for murder: an ethical lockout.

The big idea here is to stop mass shootings and other ethically incorrect uses for firearms through the development of an AI that can recognize intent, judge whether it’s ethical use, and ultimately render a firearm inert if a user tries to ready it for improper fire.

That sounds like a lofty goal. In fact, the researchers themselves call it a "blue sky" idea, but the technology to make it possible is already here.

According to the team’s research:

The research goes on to explain how recent breakthroughs from long-term studies have led to the development of various AI-powered reasoning systems that could make a fairly simple ethical judgment system for firearms trivial to implement.

This paper doesn’t describe the creation of a smart gun itself. Instead, it describes the potential efficacy of an AI system that could make the same kinds of decisions for firearms users as, for example, cars that lock out drivers who can’t pass a breathalyzer.

In this way, the AI would be trained to recognize the human intent behind an action. The researchers describe the recent mass shooting at a Walmart in El Paso and offer a different view of what could have happened:

This paints a wonderful picture. It’s hard to imagine any objections to a system that worked perfectly. Nobody needs to load, rack, or fire a firearm in a Walmart parking lot unless they’re in danger. If the AI could be developed in such a way that it would only allow users to fire in ethical situations such as self-defense, while at a firing range, or in designated legal hunting areas, thousands of lives could be saved every year.

Of course, the researchers anticipate myriad objections. After all, they’re focused on navigating the US political landscape. In most civilized nations, gun control is common sense.

The team anticipates people pointing out that criminals will just use firearms that don’t have an AI watchdog embedded:

Clearly the contribution here isn’t the development of a smart gun, but the creation of an ethically correct AI. If criminals won’t put the AI on their guns, or they continue to use dumb weapons, the AI can still be effective when installed in other sensors. It could, hypothetically, be used to perform any number of functions once it determines violent human intent.

It could lock doors, stop elevators, alert authorities, change traffic light patterns, text location-based alerts, and any number of other reactionary measures including unlocking law enforcement and security personnel’s weapons for defense.

The researchers also figure there will be objections based on the idea that people could hack the weapons. This one’s pretty easily dismissed: firearms will be easier to secure than robots, and we’re already putting AI in those.

While there’s no such thing as total security, the US military fills its ships, planes, and missiles with AI, and we’ve managed to figure out how to keep the enemy from hacking them. We should be able to keep police officers’ service weapons just as safe.

Realistically, it takes a leap of faith to assume an ethical AI can be made to understand the difference between situations such as, for example, home invasion and domestic violence, but the groundwork is already there.

If you look at driverless cars, we know people have already died because they relied on an AI to protect them. But we also know that the potential to save tens of thousands of lives is too great to ignore when weighed against the relatively small number of accidental fatalities so far.

It’s likely that, just like Tesla’s AI, a gun control AI could result in accidental and unnecessary deaths. But approximately 24,000 people die annually in the US due to suicide by firearm, 1,500 children are killed by gun violence, and almost 14,000 adults are murdered with guns. It stands to reason an AI intervention could significantly decrease those numbers.

You can read the whole paper here.

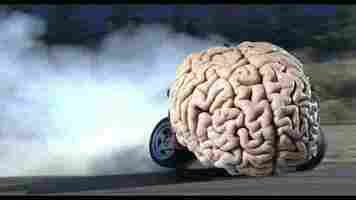

Is ‘brain drift’ the key to machine consciousness?

Think about someone you love and the neurons in your brain will light up like a Christmas tree. But if you think about them again, will the same lights come on? Chances are the answer’s no. And that could have big implications for the future of AI.

A team of neuroscientists from Columbia University in New York recently published research demonstrating what they refer to as “representational drift” in the brains of mice.

Per the paper:

Up front: What’s interesting here is that, in lieu of a better theory, it has long been believed that neurons in the brain associate experiences and memories with static patterns. In essence, this would mean that when you smell cotton candy, certain neurons fire up in your brain, and when you smell pizza, different ones do.

And, while this is basically still true, what’s changed is that the scientists no longer believe that the same neurons fire up when you smell cotton candy as did the last time you smelled cotton candy.

This is what “representational drift,” or “brain drift” as we’re calling it, means. Instead of the exact same neurons firing up every time, different neurons across different locations fire up to represent the same concept.

The scientists used mice and their sense of smell in laboratory experiments because it represents a halfway point between the ephemeral nature of abstract memories (what does London feel like?) and the static nature of our other brain connections (our brain’s connection to our muscles, for example).

What the team found was that, despite the fact that we can recognize objects by smell, our brain represents the same smells differently over time. A smell you encounter one month will have a totally different neural representation a month later if you take another whiff.

The interesting part: The scientists don’t really know why. This is because they’re bushwhacking a path where few have trod. There just isn’t much in the way of data-based research on how the brain perceives memory and why some memories can seemingly teleport unchanged across areas of the brain.

But perhaps most interesting are the implications. In many ways our brains function similarly to artificial neural networks. However, the distinct differences between our mysterious gray matter and the meticulously plotted AI systems human engineers build may be where we find everything we need to reverse engineer sentience, consciousness, and the secret of life.

Quick take: According to the scientists’ description, the brain appears to retune memory associations over time, like an FM radio in a moving car. Depending on how time and experience have changed you and your perception of the world, your brain may simply be readjusting to reality in order to integrate new information seamlessly.

This would indicate we don’t “delete” our old memories or simply update them in place like replacing the contents of a folder. Instead, we re-establish our connection with reality and distribute data across our brain network.

Perhaps the mechanisms driving the integration of data in the human brain (whatever controls the seemingly unpredictable distribution of information across neurons) are what’s missing from our modern-day artificial neural networks and machine learning systems.

You can read the whole paper here.

Stunning astronomical study indicates our galaxy may be teeming with life

The search for extraterrestrial life may not take us very far from home. Astronomers from the University of Copenhagen recently published an incredible study demonstrating there’s a high likelihood the Milky Way is absolutely flooded with potentially life-bearing planets.

Published in Science Advances, the team’s paper, entitled “A pebble accretion model for the formation of the terrestrial planets in the Solar System,” lays out and attempts to validate a theory that planets are formed by tiny, millimeter-sized pebbles massing together over time.

According to the researchers:

The big idea here involves ice pebbles being present at the earliest stages of planet formation. The scientists believe Earth, Mars, and Venus were formed this way and project we’ll find the same scenario for most exoplanets. That means that, where it was once believed only Earth or a few Earth-like worlds might have water, it’s now possible that most planetary bodies have some form of water on them.

Per a university press release, lead researcher Anders Johansen says carbon-based life may be a much more frequent occurrence than once thought:

The next generation of telescopes should extend our field of vision beyond our own solar system and start giving us some real data on the chemical and topographical makeup of exoplanets orbiting other stars. And, thanks to a plethora of machine learning and artificial intelligence breakthroughs, the search for habitable worlds featuring the building blocks of life has entered an exciting new era.

Quick take: This is an exciting time to be an ET lover! This is among the biggest near-concrete indicators that there could be more Earth-like planets out there.

Where we’ve long hoped to find so much as a sign that some dusty, cold rock out there once hosted some simple, space-faring, single-celled organisms, now serious scientists have license to hypothesize fantastic planets full of ocean life, ice critters, or even surface oceans separated by life-filled continents. And all right next door.

It’s looking less and less like we’re alone in the universe and more and more like we could discover some form of life on other planets within a matter of decades.

Read the whole paper here.