Scientists propose first steps toward creating life on Mars

The moment humankind stepped out from our caves and gazed upon the night sky with curiosity and wonder, our ancestors set into motion a chain of events that will almost certainly end up with humans walking on Martian soil.

And, if you believe the current hype, that day could come in the next couple of decades. NASA has every intention of sending a crewed mission to the red planet sometime in the 2030s.

In the meantime, those of us who remain stuck here on Earth are left to wonder what such an accomplishment would ultimately mean for humankind.

Is Elon Musk right? Is it imperative to the future of humanity that we become a “multiplanet species” as soon as possible?

And, if so, what exactly does that mean? How do we take a barren, rocky planet that makes Antarctica look like a tropical resort and turn it into the kind of place people might want to raise their children?

The answer’s simple: terraforming. Basically, we use science and engineering to figure out a way to make Mars more like Earth.

Of course, we as a species don’t have the technology needed to terraform planets. If we did, we might use it to keep our own from deteriorating past the point of no return due to human-caused climate change.

But, let’s assume we eventually figure terraforming out. What would it look like?

According to a team of researchers from NASA, RAL Space lab, and Princeton, the first step would involve establishing a magnetosphere around the red planet.

Per the team’s paper:

The big idea, then, would be to figure out a way to kickstart the atmosphere by establishing a magnetosphere like Earth’s.

But, as Universe Today’s Brian Koberlein points out, this is easier said than done:

The research goes on to explain how one viable alternative might be to get one of Mars’ moons to act as a sort of magnetic particle-generator that charges up the planet from the outside in.

It’s a bit more complex than that, but the gist is that the magnetosphere would form around the planet and, eventually, lead to a stable biosphere. You’d have breathable air, water, and protection from the harmful radiation that would currently make residing on Mars a living nightmare.

It’s perfectly reasonable to imagine flora and fauna thriving on the red planet within a matter of centuries. And it goes without saying that such an environment should be able to support human life.

And this has some interesting philosophical implications. So far, humans have not found definitive proof of intelligent life beyond our own planet.

But even if we never discover alien life, there could be life on other planets in a few hundred years. What if, by the year 2500, the unique conditions we’ve cultivated on Mars result in bespoke plants and animals?

Humans would have propagated the first known seeds of life beyond Earth and, arguably, established evidence for our own species as the progenitors of intelligent life in the universe.

After all, if we can terraform Mars, there’s nothing stopping us from doing the same to other planets. In fact, the team working on the magnetosphere problem envisions practical uses for the technology beyond just making planets livable.

Per the paper:

Solving the deep space radiation problem using craft-sized magnetospheres would be a game-changer for interplanetary travel and, ultimately, a necessary foundation for crewed missions into deep space.

The researchers do caution that this study isn’t meant to justify the need for such technologies, but to discuss the potential applications and engineering concerns as a sort of conversation-starter.

It’s likely we’re centuries from growing tomatoes on the red planet. But it’s never too soon to imagine how we go from where we are now to eating Martian marinara sauce.

Researchers fooled AI into ignoring stop signs using a cheap projector

A trio of researchers at Purdue today published pre-print research demonstrating a novel adversarial attack against computer vision systems that can make an AI see – or not see – whatever the attacker wants.

It’s something that could potentially affect self-driving vehicles, such as Tesla’s, that rely on cameras to navigate and identify objects.

Up front: The researchers wanted to confront the problem of digital manipulation in the physical world. It’s easy enough to hack a computer or fool an AI if you have physical access to it, but tricking a closed system is much harder.

Per the team’s pre-print paper:

Background: There are a lot of ways to try to trick an AI vision system. These systems use cameras to capture images and then compare what they see against patterns learned from large collections of similar images.

If we wanted to stop an AI from scanning our face we could wear a Halloween mask. And if we wanted to stop an AI from seeing at all we could cover its cameras. But those solutions require a level of physical access that’s often prohibitive for dastardly deed-doers.

What the researchers have done here is come up with a novel way to attack a digital system in the physical world.

They use a “low-cost projector” to shine an adversarial pattern (a specific arrangement of light, images, and shadows) that tricks the AI into misinterpreting what it’s seeing.
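To make the idea of an adversarial pattern a little more concrete, here is a minimal Python sketch of a generic fast-gradient-sign-style attack on a toy classifier. It is not the Purdue team’s method, which also has to account for how a real projector and camera distort light. The weights, pixel values, and function names are made up for illustration, and the rule that a projector can only add light is a simplifying assumption.

```python
from __future__ import annotations

import math


def sigmoid(z: float) -> float:
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def stop_sign_score(x: list[float], w: list[float], b: float) -> float:
    """Toy linear classifier: probability that the 'image' x shows a stop sign."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def projector_fgsm(x: list[float], w: list[float], eps: float) -> list[float]:
    """One fast-gradient-sign-style step that lowers the stop-sign score.

    For a linear model, the gradient of the raw score with respect to pixel i
    is simply w[i], so moving each pixel against sign(w[i]) pushes the score down.
    Simplifying assumption: a projector can only ADD light, so only pixels with
    negative weights are brightened (capped at 1.0); the rest are left untouched.
    """
    return [min(1.0, xi + eps) if wi < 0 else xi for wi, xi in zip(w, x)]


if __name__ == "__main__":
    w = [2.0, -3.0, 1.5, -2.5]  # hypothetical weights for a 4-pixel "image"
    b = -0.5
    x = [0.9, 0.2, 0.8, 0.1]    # hypothetical clean image
    adv = projector_fgsm(x, w, eps=0.5)
    print(round(stop_sign_score(x, w, b), 2))    # 0.84: read as a stop sign
    print(round(stop_sign_score(adv, w, b), 2))  # 0.25: no longer a stop sign
```

With these toy numbers, brightening just two of the four “pixels” flips the classifier’s answer, which is the basic intuition behind why a carefully chosen light pattern can change what a vision model reports.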

The main point of this kind of research is to discover potential dangers and then figure out how to stop them. To that end, the researchers say they’ve learned a great deal about mitigating these kinds of attacks.

Unfortunately, this is the kind of threat you either need infrastructure in place to deal with or have to train your systems to defend against ahead of time. Hypothetically speaking, that means it’s possible this attack could become a live threat to camera-based AI systems at any moment.

That possibility exposes a major flaw in Tesla’s vision-only system (most other manufacturers’ autonomous vehicle systems use a combination of different sensor types).

While it’s arguable the company’s vehicles are the most advanced on Earth, there’s no infrastructure in place to mitigate the possibility of a projector attack on a moving vehicle’s vision systems.

Quick take: Let’s not get too hasty in our judgment of Tesla’s decision-making when it comes to going vision-only. Having a single point of failure is obviously a bad thing, but the company’s got bigger things to worry about right now than this particular type of attack.

First, the researchers didn’t test the attack on driverless vehicles. It’s possible that big tech and big auto both have this sort of thing figured out; we’ll have to wait and see if the research crosses over.

But that doesn’t mean it’s not something that could be adapted or developed to be a threat to any and all vision systems. A hack that can fool an AI system on a laptop into thinking a stop sign is a speed limit sign can, at least potentially, fool an AI system in a car into thinking the same thing.

Second, the other potential uses for this kind of adversarial attack are pretty terrifying too.

The research concludes with this statement:

The researchers then acknowledge that the work was partially funded by the US Army. It’s not hard to imagine why the government would have a vested interest in fooling facial recognition systems.

You can read the whole paper on arXiv.

How Baidu’s AI produces news videos using just a URL

AI for news production is an area that has drawn contrasting opinions. On one hand, it might help media houses produce more news in a better format with minimal effort; on the other, it might strip away the human element of journalism or put people out of work.

In 2018, an AI anchor developed by China’s Xinhua news agency made its debut. Earlier this month, the agency released an improved version that mimics human voices and gestures. AI has also advanced in text-based news, with algorithms writing great headlines. China’s search giant Baidu has developed a new AI model called Vidpress that brings video and text together by creating clips based on articles.

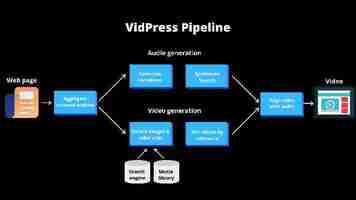

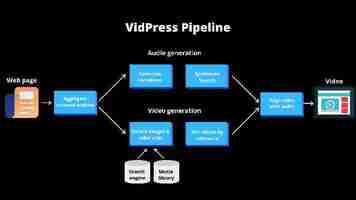

Baidu has deployed Vidpress on its short-video app Haokan, and the tool currently works only with Mandarin. The company claims the algorithm can produce up to 1,000 videos per day, a whole lot more than the 300-500 its human editors currently put out. Vidpress can create a two-minute 720p video in two and a half minutes, while human editors take an average of 15 minutes for the same task.

To train the model, Baidu used thousands of online articles to teach it the context of a news story. The company also had to train separate AI models for voice and video generation; in the final step, the algorithm syncs both streams into a smooth final video.

When you feed the AI algorithm a URL, it automatically fetches related articles from the internet and creates a summary. For instance, if your input is a story about Apple launching a new iPhone, the AI will fetch all the details related to the launch, including specs and price. To create the video, it then searches for related pictures and clips in your media library and on the web.
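Baidu hasn’t published how Vidpress works internally, so the following is only a toy Python sketch of the steps described above: find related articles, condense them into a short summary, and pair each summary line with a matching clip from a media library. Everything here is hypothetical. An in-memory list stands in for the web, a naive word-frequency ranking stands in for Baidu’s trained models, the example.com URLs and clip names are invented, and the voice synthesis and stream syncing the company describes are not modeled at all.

```python
from __future__ import annotations

import re
from collections import Counter
from dataclasses import dataclass, field

# Tiny stopword list so words like "the" and "a" don't dominate the scoring.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "is", "at", "for", "with"}


@dataclass
class Article:
    url: str
    text: str


@dataclass
class Clip:
    name: str
    tags: set[str] = field(default_factory=set)


def keywords(text: str) -> list[str]:
    """Lowercase word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def sentences(text: str) -> list[str]:
    """Split on whitespace that follows sentence-ending punctuation."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def fetch_related(seed: Article, corpus: list[Article], min_shared: int = 2) -> list[Article]:
    """Stand-in for a web search: other articles sharing >= min_shared keywords with the seed."""
    seed_words = set(keywords(seed.text))
    return [
        a for a in corpus
        if a.url != seed.url and len(seed_words & set(keywords(a.text))) >= min_shared
    ]


def summarize(articles: list[Article], max_sentences: int = 2) -> list[str]:
    """Naive extractive summary: rank sentences by how common their words are overall."""
    freq = Counter(w for a in articles for w in keywords(a.text))
    pool = [s for a in articles for s in sentences(a.text)]
    # sorted() is stable (even with reverse=True), so ties keep their original order.
    ranked = sorted(pool, key=lambda s: sum(freq[w] for w in set(keywords(s))), reverse=True)
    return ranked[:max_sentences]


def pick_clip(sentence: str, library: list[Clip]) -> str | None:
    """Return the clip whose tags overlap the sentence most, or None if nothing overlaps."""
    words = set(keywords(sentence))
    best = max(library, key=lambda c: len(c.tags & words), default=None)
    return best.name if best is not None and best.tags & words else None


def storyboard(seed_url: str, corpus: list[Article], library: list[Clip]) -> list[tuple[str, str | None]]:
    """URL in, (narration line, clip name) pairs out."""
    seed = next(a for a in corpus if a.url == seed_url)
    related = fetch_related(seed, corpus)
    return [(s, pick_clip(s, library)) for s in summarize([seed] + related)]


if __name__ == "__main__":
    corpus = [
        Article("https://example.com/iphone", "Apple launched a new iPhone today. The iPhone has a faster chip."),
        Article("https://example.com/price", "The new iPhone price starts at 799 dollars. Apple also showed the iPhone camera."),
        Article("https://example.com/weather", "Rain is expected tomorrow."),
    ]
    library = [
        Clip("launch_event.mp4", {"launch", "launched", "stage"}),
        Clip("price_chart.png", {"price", "dollars"}),
        Clip("rain.mp4", {"rain"}),
    ]
    for line, clip in storyboard("https://example.com/iphone", corpus, library):
        print(f"{clip}: {line}")
```

Run on that sample data, the storyboard keeps the launch sentence and the price sentence and pairs them with the launch clip and the price chart, while the unrelated weather story is never pulled in.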

Baidu said its AI can also detect trends on social media and create videos on related topics. The company says it aims to provide Vidpress to news agencies and creators so that they can turn their posts into videos with synthesized narratives.

The search giant said the AI only draws on the most trusted sources to create content, but it didn’t provide a list of those sources. While the company is running this model in a controlled environment right now, it might have to put certain guardrails in place when it’s released to more people. It will also need to assign editors to check whether anyone is producing videos that spread misinformation or violate copyright.