The first WHO report on AI in healthcare is a mixed bag of horror and delight

The World Health Organization today issued its first-ever report on the use of artificial intelligence in healthcare.

The report is 165 pages cover-to-cover and it provides a summary assessment of the current state of AI in healthcare while also laying out several opportunities and challenges.

Most of what the report covers boils down to six “guiding principles for [AI’s] design and use.”

Per a WHO blog post , these include:

Protecting human autonomy

Promoting human well-being and safety and the public interest

Ensuring transparency, explainability and intelligibility

Fostering responsibility and accountability

Ensuring inclusiveness and equity

Promoting AI that is responsive and sustainable

These bullet points make up the framework for the report’s exploration of the current and potential benefits and dangers of using AI in healthcare.

The good news

The report focuses a lot of attention on cutting through hype to give analysis on the present capabilities of AI in the healthcare sector. And, according to the report, the most common use for AI in healthcare is as a diagnostic aid.

Per the report:

The WHO anticipates this will soon change.

Per the report, the WHO expects AI to improve nearly every aspect of healthcare from diagnostic accuracy to improved record-keeping. And there’s even hope it could lead to drastically improved outcomes for patients presenting with stroke, heart attack, or other illnesses where early diagnosis is crucial.

Furthermore, AI is a data-based technology. The WHO believes the onset of machine learning technologies in healthcare could help predict the spread of disease and possibly even prevent epidemics in the future.

It’s obvious from the report that the WHO is optimistic for the future of AI in healthcare. However, the report also details numerous challenges and risks associated with the wide-scale implementation of AI technologies into the healthcare system.

The bad news

The report recognizes efforts on behalf of numerous nations to codify the use of AI in healthcare, but it also notes that current policies and regulations aren’t enough to protect patients and the public at large.

Specifically, the report outlines several areas where AI could make things worse. These include modern day concerns such as handing care of the elderly over to inhuman automated systems. And they also include future concerns: what happens when a human doctor disagrees with a black box AI system? If we can’t explain why an AI made a decision, can we defend it if its diagnosis when it matters?

And the report also spends a significant portion of its pages discussing the privacy implications for the full implementation of AI into healthcare.

Per the report:

In other words: Even when everything is transparent, how can anyone be sure patients are giving informed consent when it comes to their medical information? When you consider the circumstances many patients are in when a doctor asks them to consent to a procedure, it’s hard to imagine a scenario where the intricacies of how artificial intelligence operates matters more than than what their doctor is recommending.

You can read the entire WHO report here .

DeepMind researchers say reinforcement learning is the key to cracking general AI

In their decades-long chase to create artificial intelligence, computer scientists have designed and developed all kinds of complicated mechanisms and technologies to replicate vision, language, reasoning, motor skills, and other abilities associated with intelligent life. While these efforts have resulted in AI systems that can efficiently solve specific problems in limited environments, they fall short of developing the kind of general intelligence seen in humans and animals.

In a new paper submitted to the peer-reviewed Artificial Intelligence journal, scientists at UK-based AI lab DeepMind argue that intelligence and its associated abilities will emerge not from formulating and solving complicated problems but by sticking to a simple but powerful principle: reward maximization.

Titled “ Reward is Enough ,” the paper, which is still in pre-proof as of this writing, draws inspiration from studying the evolution of natural intelligence as well as drawing lessons from recent achievements in artificial intelligence. The authors suggest that reward maximization and trial-and-error experience are enough to develop behavior that exhibits the kind of abilities associated with intelligence. And from this, they conclude that reinforcement learning, a branch of AI that is based on reward maximization, can lead to the development of artificial general intelligence .

Two paths for AI

One common method for creating AI is to try to replicate elements of intelligent behavior in computers. For instance, our understanding of the mammal vision system has given rise to all kinds of AI systems that can categorize images, locate objects in photos, define the boundaries between objects, and more. Likewise, our understanding of language has helped in the development of various natural language processing systems, such as question answering, text generation, and machine translation.

These are all instances of narrow artificial intelligence , systems that have been designed to perform specific tasks instead of having general problem-solving abilities. Some scientists believe that assembling multiple narrow AI modules will produce higher intelligent systems. For example, you can have a software system that coordinates between separate computer vision , voice processing, NLP, and motor control modules to solve complicated problems that require a multitude of skills.

A different approach to creating AI, proposed by the DeepMind researchers, is to recreate the simple yet effective rule that has given rise to natural intelligence. “[We] consider an alternative hypothesis: that the generic objective of maximising reward is enough to drive behaviour that exhibits most if not all abilities that are studied in natural and artificial intelligence,” the researchers write.

This is basically how nature works. As far as science is concerned, there has been no top-down intelligent design in the complex organisms that we see around us. Billions of years of natural selection and random variation have filtered lifeforms for their fitness to survive and reproduce. Living beings that were better equipped to handle the challenges and situations in their environments managed to survive and reproduce. The rest were eliminated.

This simple yet efficient mechanism has led to the evolution of living beings with all kinds of skills and abilities to perceive, navigate, modify their environments, and communicate among themselves.

“The natural world faced by animals and humans, and presumably also the environments faced in the future by artificial agents, are inherently so complex that they require sophisticated abilities in order to succeed (for example, to survive) within those environments,” the researchers write. “Thus, success, as measured by maximising reward, demands a variety of abilities associated with intelligence. In such environments, any behaviour that maximises reward must necessarily exhibit those abilities. In this sense, the generic objective of reward maximization contains within it many or possibly even all the goals of intelligence.”

For example, consider a squirrel that seeks the reward of minimizing hunger. On the one hand, its sensory and motor skills help it locate and collect nuts when food is available. But a squirrel that can only find food is bound to die of hunger when food becomes scarce. This is why it also has planning skills and memory to cache the nuts and restore them in winter. And the squirrel has social skills and knowledge to ensure other animals don’t steal its nuts. If you zoom out, hunger minimization can be a subgoal of “staying alive,” which also requires skills such as detecting and hiding from dangerous animals, protecting oneself from environmental threats, and seeking better habitats with seasonal changes.

“When abilities associated with intelligence arise as solutions to a singular goal of reward maximisation, this may in fact provide a deeper understanding since it explains why such an ability arises,” the researchers write. “In contrast, when each ability is understood as the solution to its own specialised goal, the why question is side-stepped in order to focus upon what that ability does.”

Finally, the researchers argue that the “most general and scalable” way to maximize reward is through agents that learn through interaction with the environment.

Developing abilities through reward maximization

In the paper, the AI researchers provide some high-level examples of how “intelligence and associated abilities will implicitly arise in the service of maximising one of many possible reward signals, corresponding to the many pragmatic goals towards which natural or artificial intelligence may be directed.”

For example, sensory skills serve the need to survive in complicated environments. Object recognition enables animals to detect food, prey, friends, and threats, or find paths, shelters, and perches. Image segmentation enables them to tell the difference between different objects and avoid fatal mistakes such as running off a cliff or falling off a branch. Meanwhile, hearing helps detect threats where the animal can’t see or find prey when they’re camouflaged. Touch, taste, and smell also give the animal the advantage of having a richer sensory experience of the habitat and a greater chance of survival in dangerous environments.

Rewards and environments also shape innate and learned knowledge in animals. For instance, hostile habitats ruled by predator animals such as lions and cheetahs reward ruminant species that have the innate knowledge to run away from threats since birth. Meanwhile, animals are also rewarded for their power to learn specific knowledge of their habitats, such as where to find food and shelter.

The researchers also discuss the reward-powered basis of language, social intelligence, imitation, and finally, general intelligence, which they describe as “maximising a singular reward in a single, complex environment.”

Here, they draw an analogy between natural intelligence and AGI: “An animal’s stream of experience is sufficiently rich and varied that it may demand a flexible ability to achieve a vast variety of subgoals (such as foraging, fighting, or fleeing), in order to succeed in maximising its overall reward (such as hunger or reproduction). Similarly, if an artificial agent’s stream of experience is sufficiently rich, then many goals (such as battery-life or survival) may implicitly require the ability to achieve an equally wide variety of subgoals, and the maximisation of reward should therefore be enough to yield an artificial general intelligence.”

Reinforcement learning for reward maximization

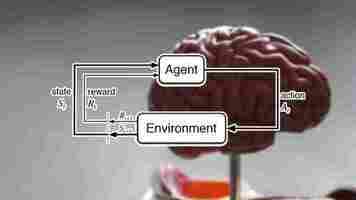

Reinforcement learning is a special branch of AI algorithms that is composed of three key elements: an environment, agents, and rewards.

By performing actions, the agent changes its own state and that of the environment. Based on how much those actions affect the goal the agent must achieve, it is rewarded or penalized. In many reinforcement learning problems, the agent has no initial knowledge of the environment and starts by taking random actions. Based on the feedback it receives, the agent learns to tune its actions and develop policies that maximize its reward.

In their paper, the researchers at DeepMind suggest reinforcement learning as the main algorithm that can replicate reward maximization as seen in nature and can eventually lead to artificial general intelligence.

“If an agent can continually adjust its behaviour so as to improve its cumulative reward, then any abilities that are repeatedly demanded by its environment must ultimately be produced in the agent’s behaviour,” the researchers write, adding that, in the course of maximizing for its reward, a good reinforcement learning agent could eventually learn perception, language, social intelligence and so forth.

In the paper, the researchers provide several examples that show how reinforcement learning agents were able to learn general skills in games and robotic environments.

However, the researchers stress that some fundamental challenges remain unsolved. For instance, they say, “We do not offer any theoretical guarantee on the sample efficiency of reinforcement learning agents.” Reinforcement learning is notoriously renowned for requiring huge amounts of data. For instance, a reinforcement learning agent might need centuries worth of gameplay to master a computer game. And AI researchers still haven’t figured out how to create reinforcement learning systems that can generalize their learnings across several domains. Therefore, slight changes to the environment often require the full retraining of the model.

The researchers also acknowledge that learning mechanisms for reward maximization is an unsolved problem that remains a central question to be further studied in reinforcement learning.

Strengths and weaknesses of reward maximization

Patricia Churchland, neuroscientist, philosopher, and professor emerita at the University of California, San Diego, described the ideas in the paper as “very carefully and insightfully worked out.”

However, Churchland pointed it out to possible flaws in the paper’s discussion about social decision-making. The DeepMind researchers focus on personal gains in social interactions. Churchland, who has recently written a book on the biological origins of moral intuitions , argues that attachment and bonding is a powerful factor in social decision-making of mammals and birds, which is why animals put themselves in great danger to protect their children.

“I have tended to see bonding, and hence other-care, as an extension of the ambit of what counts as oneself—‘me-and-mine,’” Churchland said. “In that case, a small modification to the [paper’s] hypothesis to allow for reward maximization to me-and-mine would work quite nicely, I think. Of course, we social animals have degrees of attachment—super strong to offspring, very strong to mates and kin, strong to friends and acquaintances etc., and the strength of types of attachments can vary depending on environment, and also on developmental stage.”

This is not a major criticism, Churchland said, and could likely be worked into the hypothesis quite gracefully.

“I am very impressed with the degree of detail in the paper, and how carefully they consider possible weaknesses,” Churchland said. “I may be wrong, but I tend to see this as a milestone.”

Data scientist Herbert Roitblat challenged the paper’s position that simple learning mechanisms and trial-and-error experience are enough to develop the abilities associated with intelligence. Roitblat argued that the theories presented in the paper face several challenges when it comes to implementing them in real life.

“If there are no time constraints, then trial and error learning might be enough, but otherwise we have the problem of an infinite number of monkeys typing for an infinite amount of time,” Roitblat said.

The infinite monkey theorem states that a monkey hitting random keys on a typewriter for an infinite amount of time may eventually type any given text.

Roitblat is the author of Algorithms are Not Enough , in which he explains why all current AI algorithms, including reinforcement learning, require careful formulation of the problem and representations created by humans.

“Once the model and its intrinsic representation are set up, optimization or reinforcement could guide its evolution, but that does not mean that reinforcement is enough,” Roitblat said.

In the same vein, Roitblat added that the paper does not make any suggestions on how the reward, actions, and other elements of reinforcement learning are defined.

“Reinforcement learning assumes that the agent has a finite set of potential actions. A reward signal and value function have been specified. In other words, the problem of general intelligence is precisely to contribute those things that reinforcement learning requires as a pre-requisite,” Roitblat said. “So, if machine learning can all be reduced to some form of optimization to maximize some evaluative measure, then it must be true that reinforcement learning is relevant, but it is not very explanatory.”

This article was originally published by Ben Dickson on TechTalks , a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech, and what we need to look out for. You can read the original article here .

IBM rolls out an all-new Elite Hybrid Cloud Build team for AI partners

IBM today announced the formation of its Elite Hybrid Cloud Build Team. According to Big Blue, this group of AI and cloud computing experts was assembled to help bring its partners into the cutting edge of hybrid solution systems.

What? While Elite Hybrid Cloud Build Team might sound like a crack squad of futuristic Airborne Seabees, the reality is almost just as cool. Per IBM:

In other words, the EHCBT helps businesses connect their on-premise, on-cloud, and other AI systems no matter what platform they’re on.

Quick take: It’s free… so, there’s not much to dislike. IBM’s heavily-invested in its own ecosystem, so it can afford to do stuff like this. And that’s a good thing for partners and clients who’re looking for a way out of single-vendor solutions.

IBM’s commitment to open-source could also be a factor here, so it’s definitely worth looking into for executives and IT managers who may be considering integrating new tech such as 5G or introducing AI systems to an already-complex stack.

For more information check out IBM’s announcement here .