Why the dream of truly driverless cars is slowly dying

We were told there’d be fully autonomous vehicles dominating roadways across the globe by 2018. When that didn’t happen, we were told they’d be here by the end of 2020. And when that didn’t happen, we were told they were just around the corner.

It seems like now the experts are taking a more pragmatic approach. According to a recent report from the Wall Street Journal, many computer science experts believe we could be at least a decade away from fully driverless cars, and some believe they may never arrive.

Driverless vehicle technology is a difficult challenge to solve.

As Elon Musk puts it:

Despite this assessment, Musk continues to push the baseless idea that Tesla’s Full Self-Driving feature is on the cusp of turning the company’s cars into fully autonomous vehicles.

FSD is a powerful driver-assistance feature, but no matter how close we move the goalposts, there’s still no indication that Tesla is any closer to achieving actual full self-driving than any other manufacturer.

In 2014, deep learning saw an explosion in popularity on the back of work from numerous computer scientists, including Ian Goodfellow. His work developing and defining the generative adversarial network (aka the GAN, a framework in which two neural networks, a generator and a discriminator, are trained against each other so that the generator learns to produce realistic outputs) made it seem like nearly any feat of autonomy could be accomplished with algorithms and neural networks.

GANs are responsible for many of the amazingly human-like feats modern AI is able to accomplish, including DeepFakes, This Person Does Not Exist, and many other systems.

The modern rise of deep learning acted as a rising tide that lifted the field of artificial intelligence research and turned Google, Amazon, and Microsoft into AI-first companies almost overnight.

Now, the artificial intelligence market is projected to be worth nearly a trillion dollars by 2028, according to experts.

But those gains haven’t translated into the level of technomagic that experts such as Ray Kurzweil predicted. AI that works well in the modern world is almost exclusively very narrow, meaning it’s designed to do a very specific thing and nothing else.

When you imagine all the things a human driver has to do – from paying attention to their surroundings to navigating to actually operating the vehicle itself – it quickly becomes a matter of designing either dozens (or hundreds) of interworking narrow AI systems or figuring out how to create a general AI.

The invention and development of a general AI would likely be a major catalyst for solving driverless cars, but so would three wishes from a genie.

This is because general AI is just another way of saying “an AI capable of performing any task a human can.” And, so far, general AI remains a far-future technology.

In the near future we can expect more of the same. Driver-assistance technology continues to develop at a decent pace and tomorrow’s cars will certainly be safer and more advanced than today’s. But there’s little reason to believe they’ll be driving themselves in the wild any time soon.

We’re likely to see specific areas set aside in major cities across the world and entire highways designated for driverless vehicle technologies in the next few years. But that won’t herald the era of driverless cars.

There’s no sign whatsoever (outside of the aforementioned Tesla gambit) that any vehicle manufacturer is even within a five-year window of developing a consumer production vehicle rated to drive fully autonomously.

And that’s a good indication that we’re at least a decade or more from seeing a consumer production vehicle made available to individual buyers that doesn’t have a steering wheel or means of manual control.

Driverless cars aren’t necessarily impossible. But there’s more to their development than just clever algorithms, brute-force compute, and computer vision.

According to the experts, it’ll take a different type of AI, a different approach altogether, a massive infrastructure endeavor, or all three to move the field forward.

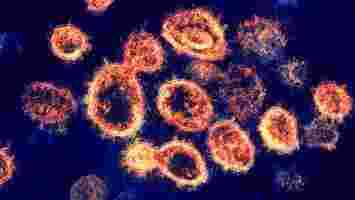

Scientists use AI to identify existing drugs to fight COVID-19

Scientists have used machine learning to find drugs already on the market that could also fight COVID-19 in elderly patients.

“Making new drugs takes forever,” said study co-author Caroline Uhler, a computational biologist at MIT. “Really, the only expedient option is to repurpose existing drugs.”

The study team searched for potential treatments by analyzing changes to gene expression in lung cells caused by both the disease and aging.

Uhler said this combination could help medical experts find drugs to test on older people:

The researchers sought to answer this question through a three-step process.

First, they generated a list of candidate drugs using an autoencoder, a type of neural network that finds data representations in an unsupervised manner.
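To make the idea of an autoencoder concrete, here is a minimal sketch in Python with NumPy. It assumes gene-expression profiles arrive as a samples-by-genes matrix. The network is trained only to reconstruct its own input, with no labels involved, and its small hidden layer becomes the learned representation. The layer sizes, learning rate, and the name `train_autoencoder` are illustrative assumptions. This is not the study's architecture, which would typically be built with a deep learning framework.

```python
import numpy as np


def train_autoencoder(X, latent_dim=2, lr=0.01, epochs=1000, seed=0):
    """Fit a one-hidden-layer autoencoder (tanh encoder, linear decoder) to X.

    X is an (n_samples, n_genes) expression matrix. Training is unsupervised:
    the target the network tries to reproduce is the input itself.
    Returns an `encode` function and the per-epoch reconstruction losses.
    """
    rng = np.random.default_rng(seed)
    n_genes = X.shape[1]
    W1 = rng.normal(0.0, 0.1, size=(n_genes, latent_dim))
    b1 = np.zeros(latent_dim)
    W2 = rng.normal(0.0, 0.1, size=(latent_dim, n_genes))
    b2 = np.zeros(n_genes)
    losses = []
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)        # encoder: compressed latent codes
        X_hat = H @ W2 + b2             # decoder: reconstruction of the input
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Gradients of the mean squared error, backpropagated by hand
        g_out = 2.0 * err / err.size
        gW2, gb2 = H.T @ g_out, g_out.sum(axis=0)
        g_hidden = (g_out @ W2.T) * (1.0 - H ** 2)   # tanh'(z) = 1 - tanh(z)^2
        gW1, gb1 = X.T @ g_hidden, g_hidden.sum(axis=0)
        # Full-batch gradient descent step (in-place updates)
        W1 -= lr * gW1
        b1 -= lr * gb1
        W2 -= lr * gW2
        b2 -= lr * gb2

    def encode(Z):
        """Map expression profiles to their latent representation."""
        return np.tanh(Z @ W1 + b1)

    return encode, losses
```

Calling `encode, losses = train_autoencoder(X)` on an expression matrix `X` returns a function that compresses any new profile into the same low-dimensional space. Falling values in `losses` show that the network is learning to reconstruct its input.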

The autoencoder analyzed two datasets of gene expression patterns to identify drugs that appeared to counteract the virus.
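One way to express "counteracting" in code is sketched below, under stated assumptions. Given some encoder that maps expression profiles into a latent space (the hypothetical `encode` argument), the function computes the shift that infection causes and the shift each drug causes. It then favors drugs whose shift points the opposite way. Cosine-similarity scoring and the name `rank_drugs` are illustrative choices, not necessarily how the study scored its candidates.

```python
import numpy as np


def _cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return float(u @ v / denom) if denom > 0 else 0.0


def rank_drugs(encode, healthy, infected, drugs):
    """Rank drugs by how strongly their latent-space effect opposes infection.

    healthy, infected: (n, n_genes) arrays of expression profiles.
    drugs: {name: (untreated, treated)} pairs of (m, n_genes) arrays.
    Returns [(name, score)] sorted from most to least counteracting.
    """
    disease_shift = encode(infected).mean(axis=0) - encode(healthy).mean(axis=0)
    scores = {}
    for name, (untreated, treated) in drugs.items():
        drug_shift = encode(treated).mean(axis=0) - encode(untreated).mean(axis=0)
        # A drug that moves cells opposite to the disease scores near +1
        scores[name] = -_cosine(drug_shift, disease_shift)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Passing `lambda Z: Z` as the encoder runs the same comparison directly in gene-expression space. This is handy for checking the logic on toy data before plugging in a trained model.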

Next, the researchers narrowed down the list by mapping the interactions of proteins involved in the aging and infection pathways. They then identified areas of overlap between the two maps.

This pinpointed the gene expression network a drug should target to fight COVID-19 in older patients.
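The overlap step can be illustrated with plain Python sets, assuming each map is given as a list of undirected protein-protein interaction edges. The sketch keeps the proteins that appear in both the aging and infection maps, plus the interactions among them. The names G1 to G4 are placeholders, and this simple node-intersection rule is an assumption; it is not necessarily how the study defined the overlap.

```python
def overlap_network(edges_a, edges_b):
    """Return (shared_nodes, shared_edges) for two undirected interaction maps.

    shared_nodes: proteins present in both maps.
    shared_edges: interactions from either map whose endpoints are both shared.
    """
    a = {frozenset(edge) for edge in edges_a}
    b = {frozenset(edge) for edge in edges_b}
    nodes_a = set().union(*a)
    nodes_b = set().union(*b)
    shared_nodes = nodes_a & nodes_b
    shared_edges = {edge for edge in a | b if edge <= shared_nodes}
    return shared_nodes, shared_edges


# Hypothetical toy maps; real ones come from protein-interaction databases.
aging = [("G1", "G2"), ("G2", "G3")]
infection = [("G2", "G3"), ("G3", "G4")]
nodes, edges = overlap_network(aging, infection)
print(sorted(nodes))                             # ['G2', 'G3']
print(sorted(tuple(sorted(e)) for e in edges))   # [('G2', 'G3')]
```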

A causal framework

Finally, the team used statistical algorithms to analyze causality in the network. This allowed them to identify the specific genes a drug should target to minimize the impact of infection.
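The study's causal analysis relied on statistical methods that are beyond a short example. The sketch below captures only the final intuition, under a strong assumption: a directed causal graph of the overlapping genes is already known. Genes whose effects propagate to many other genes are natural intervention targets, so the code ranks each gene by how many genes lie downstream of it. The graph and gene names are hypothetical, and this reachability count is a simplification, not the researchers' algorithm.

```python
from collections import deque


def downstream(graph, start):
    """Return every gene reachable from `start` along directed causal edges."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    seen.discard(start)  # a gene is not its own downstream target
    return seen


def rank_targets(graph):
    """Rank genes by the number of genes they causally influence."""
    nodes = set(graph) | {c for children in graph.values() for c in children}
    counts = {n: len(downstream(graph, n)) for n in nodes}
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical causal graph: an edge X -> Y means "changing X changes Y".
causal = {"G1": ["G2", "G3"], "G2": ["G4"], "G3": [], "G4": []}
print(rank_targets(causal))  # [('G1', 3), ('G2', 1), ('G3', 0), ('G4', 0)]
```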

The system highlighted the RIPK1 gene as a promising target for the COVID-19 drugs. The researchers then found a list of approved drugs that act on RIPK1.

Some of these have been approved for treating cancer, while others are already being tested on COVID-19 patients.

The researchers note that rigorous in vitro experiments and clinical trials are still required to determine their efficacy. But they also envision extending their framework to other infections:

You can read the study paper in Nature Communications.

Computer vision can help spot cyber threats with startling accuracy

This article is part of our reviews of AI research papers, a series of posts that explore the latest findings in artificial intelligence.

The last decade’s growing interest in deep learning was triggered by the proven capacity of neural networks in computer vision tasks. If you train a neural network with enough labeled photos of cats and dogs, it will be able to find recurring patterns in each category and classify unseen images with decent accuracy.

What else can you do with an image classifier?

In 2019, a group of cybersecurity researchers wondered if they could treat security threat detection as an image classification problem. Their intuition proved to be well-placed, and they were able to create a machine learning model that could detect malware based on images created from the content of application files. A year later, the same technique was used to develop a machine learning system that detects phishing websites.

The combination of binary visualization and machine learning is a powerful technique that can provide new solutions to old problems. It is showing promise in cybersecurity, but it could also be applied to other domains.

Detecting malware with deep learning

The traditional way to detect malware is to search files for known signatures of malicious payloads. Malware detectors maintain a database of virus definitions, which include opcode sequences or code snippets, and they search new files for the presence of these signatures. Unfortunately, malware developers can easily circumvent such detection methods with techniques such as obfuscating their code or using polymorphism to mutate their code at runtime.
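Signature matching, and the reason simple obfuscation defeats it, fit in a few lines of Python. The signature name and byte pattern below are made up for illustration. Real antivirus engines use far richer definition formats and matching logic, and real obfuscated malware also carries a small decoder that restores the payload at runtime.

```python
# Hypothetical signature database: name -> byte pattern found in a known payload.
SIGNATURES = {
    "Demo.FakeVirus.A": bytes.fromhex("deadbeef"),
}


def scan(data, signatures=SIGNATURES):
    """Return the names of all signatures whose byte pattern occurs in `data`."""
    return [name for name, pattern in signatures.items() if pattern in data]


def xor_obfuscate(data, key=0x5A):
    """Trivially 'obfuscate' a payload by XOR-ing every byte with a key."""
    return bytes(b ^ key for b in data)


sample = b"hello" + bytes.fromhex("deadbeef") + b"world"
print(scan(sample))                 # ['Demo.FakeVirus.A']
print(scan(xor_obfuscate(sample)))  # [] : the encoded bytes no longer contain the pattern
```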

Dynamic analysis tools try to detect malicious behavior during runtime, but they are slow and require the setup of a sandbox environment to test suspicious programs.

In recent years, researchers have also tried a range of machine learning techniques to detect malware. These ML models have made progress on some of the challenges of malware detection, including code obfuscation. But they bring new challenges of their own, including the need to learn a very large number of features and to run the target samples in a virtual environment for analysis.

Binary visualization can redefine malware detection by turning it into a computer vision problem. In this methodology, files are run through algorithms that transform binary and ASCII values to color codes.
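Here is a minimal sketch of the byte-to-color idea. It assumes a simple scheme that colors each byte by its class (null, printable ASCII, control character, non-ASCII, 0xFF) and lays the bytes out row by row. The specific colors, the row-major layout, and the function names are assumptions for illustration. The visualization tools used in the research may order pixels differently, for example along a space-filling curve.

```python
import numpy as np

# Illustrative color per byte class (RGB).
NULL_COLOR = (0, 0, 0)
FF_COLOR = (255, 255, 255)
PRINTABLE_COLOR = (55, 126, 184)   # blue: printable ASCII 0x20-0x7E
CONTROL_COLOR = (77, 175, 74)      # green: control characters 0x01-0x1F, 0x7F
HIGH_COLOR = (228, 26, 28)         # red: non-ASCII bytes 0x80-0xFE


def byte_color(b):
    """Map a single byte value (0-255) to an RGB triple by byte class."""
    if b == 0x00:
        return NULL_COLOR
    if b == 0xFF:
        return FF_COLOR
    if 0x20 <= b <= 0x7E:
        return PRINTABLE_COLOR
    if b < 0x20 or b == 0x7F:
        return CONTROL_COLOR
    return HIGH_COLOR


def visualize(data, width=64):
    """Render raw bytes as a (height, width, 3) uint8 image, row by row.

    Unused pixels in the last row stay black (same as null bytes).
    """
    height = -(-len(data) // width)  # ceiling division
    img = np.zeros((height, width, 3), dtype=np.uint8)
    for i, b in enumerate(data):
        img[i // width, i % width] = byte_color(b)
    return img
```

Reading a file with `open(path, "rb").read()` and passing the bytes to `visualize` yields an image array that a classifier can consume. Text-heavy files come out mostly blue, while packed or encrypted content shows up as dense red noise.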

In a paper published in 2019, researchers at the University of Plymouth and the University of Peloponnese showed that when benign and malicious files were visualized with this method, new patterns emerged that separate malicious and safe files. These differences would have gone unnoticed using classic malware detection methods.

According to the paper, “Malicious files have a tendency for often including ASCII characters of various categories, presenting a colorful image, while benign files have a cleaner picture and distribution of values.”

When you have such detectable patterns, you can train an artificial neural network to tell the difference between malicious and safe files. The researchers created a dataset of visualized binary files that included both benign and malicious files. The dataset contained a variety of malicious payloads (viruses, worms, trojans, rootkits, etc.) and file types (.exe, .doc, .pdf, .txt, etc.).

The researchers then used the images to train a classifier neural network. The architecture they used is the self-organizing incremental neural network (SOINN), which is fast and especially good at dealing with noisy data. They also used an image preprocessing technique to shrink the binary images into 1,024-dimension feature vectors, which makes learning patterns in the input data much easier and more compute-efficient.
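The shrinking step can be sketched as converting the color image to grayscale and resampling it onto a 32 × 32 grid, which yields exactly 32 × 32 = 1,024 values. Grayscale conversion and nearest-neighbor resampling are assumptions made for brevity, and the paper's actual preprocessing technique may differ.

```python
import numpy as np


def to_feature_vector(img, side=32):
    """Shrink an (h, w, 3) uint8 image to a flat vector of side*side floats.

    Steps (illustrative): average the RGB channels into grayscale scaled to
    [0, 1], then pick evenly spaced rows and columns (nearest-neighbor
    resampling) so any image size maps onto the same fixed-length vector.
    """
    gray = img.astype(np.float64).mean(axis=2) / 255.0
    h, w = gray.shape
    rows = (np.arange(side) * h) // side
    cols = (np.arange(side) * w) // side
    return gray[np.ix_(rows, cols)].ravel()
```

Because every file, large or small, ends up as the same 1,024-value vector, the classifier always sees a fixed-size input. This is a large part of why training stays cheap.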

The resulting neural network was efficient enough to process a training dataset of 4,000 samples in 15 seconds on a personal workstation with an Intel Core i5 processor.

Experiments by the researchers showed that the deep learning model was especially good at detecting malware in .doc and .pdf files, which are the preferred medium for ransomware attacks. The researchers suggested that the model’s performance could be improved if it were adjusted to take the file type as one of its learning dimensions. Overall, the algorithm achieved an average detection rate of around 74 percent.
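The researchers' suggestion of treating file type as an extra learning dimension could look like the sketch below: a one-hot encoding of the file extension appended to the image features. The list of extensions and the function name are hypothetical.

```python
import os

import numpy as np

# Hypothetical vocabulary of file types the model knows about.
FILE_TYPES = [".exe", ".doc", ".pdf", ".txt"]


def with_file_type(features, filename, file_types=FILE_TYPES):
    """Append a one-hot file-type indicator to an image feature vector.

    Unknown extensions get an all-zero indicator.
    """
    ext = os.path.splitext(filename)[1].lower()
    one_hot = np.array([1.0 if ext == t else 0.0 for t in file_types])
    return np.concatenate([features, one_hot])
```

With this extra input, the classifier can learn different "normal" color patterns for each format rather than one pattern for all files.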

Detecting phishing websites with deep learning

Phishing attacks are a growing problem for organizations and individuals. Many phishing attacks trick victims into clicking a link to a malicious website that poses as a legitimate service, where they end up entering sensitive information such as credentials or financial details.

Traditional approaches for detecting phishing websites revolve around blacklisting malicious domains or whitelisting safe domains. The former misses new phishing websites until someone falls victim, and the latter is too restrictive and requires extensive effort to grant access to every safe domain.

Other detection methods rely on heuristics. These methods are more accurate than blacklists, but they still fall short of providing optimal detection.

In 2020, a group of researchers at the University of Plymouth and the University of Portsmouth used binary visualization and deep learning to develop a novel method for detecting phishing websites.

The technique uses binary visualization libraries to transform website markup and source code into color values.

As with benign and malicious application files, visualizing websites reveals distinct patterns that separate safe and malicious sites. The researchers write, “The legitimate site has a more detailed RGB value because it would be constructed from additional characters sourced from licenses, hyperlinks, and detailed data entry forms. Whereas the phishing counterpart would generally contain a single or no CSS reference, multiple images rather than forms and a single login form with no security scripts. This would create a smaller data input string when scraped.”

The example below shows the visual representation of the code of the legitimate PayPal login compared to a fake phishing PayPal website.

The researchers created a dataset of images representing the code of legitimate and malicious websites and used it to train a classification machine learning model.

The architecture they used is MobileNet, a lightweight convolutional neural network (CNN) that is optimized to run on user devices instead of high-capacity cloud servers. CNNs are especially suited for computer vision tasks including image classification and object detection.

Once the model is trained, it is plugged into a phishing detection tool. When the user stumbles on a new website, the tool first checks whether the URL is included in its database of malicious domains. If the domain is new, the site’s code is transformed through the visualization algorithm and run through the neural network to check whether it has the patterns of malicious websites. This two-step architecture combines the speed of blacklist databases with the smarter detection of the neural network–based technique.
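The two-step flow can be sketched as follows. The blacklist is assumed to hold domain names. `fetch_source` and `classify` are hypothetical stand-ins for downloading a page and running the trained network; in the researchers' system, that network would first convert the page code to an image. The 0.5 threshold is likewise an assumption.

```python
from urllib.parse import urlparse


def check_url(url, blacklist, fetch_source, classify, threshold=0.5):
    """Two-step phishing check: fast blacklist lookup, then the ML model.

    blacklist:    set of known-malicious domain names (lowercase).
    fetch_source: callable returning the page's HTML/source for a URL.
    classify:     callable returning a phishing probability for that source.
    """
    domain = (urlparse(url).hostname or "").lower()
    if domain in blacklist:
        # Step 1: known bad domain, no need to download or analyze the page
        return "phishing (blacklisted)"
    # Step 2: unseen domain, so fall back to the slower neural-network check
    score = classify(fetch_source(url))
    return "phishing (model)" if score >= threshold else "probably legitimate"
```

Keeping the cheap lookup first means the expensive model only runs on domains the blacklist has never seen. This matches the division of labor described above.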

The researchers’ experiments showed that the technique could detect phishing websites with 94 percent accuracy. “Using visual representation techniques allows to obtain an insight into the structural differences between legitimate and phishing web pages. From our initial experimental results, the method seems promising and being able to fast detection of phishing attacker with high accuracy. Moreover, the method learns from the misclassifications and improves its efficiency,” the researchers wrote.

I recently spoke to Stavros Shiaeles, cybersecurity lecturer at the University of Portsmouth and co-author of both papers. According to Shiaeles, the researchers are now preparing the technique for adoption in real-world applications.

Shiaeles is also exploring the use of binary visualization and machine learning to detect malware traffic in IoT networks.

As machine learning continues to make progress, it will provide scientists new tools to address cybersecurity challenges. Binary visualization shows that with enough creativity and rigor, we can find novel solutions to old problems.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech, and what we need to look out for. You can read the original article here.